Have you ever wondered how the smart chatbot you’re talking to actually works?

ChatGPT might seem like it understands you perfectly, but the truth is far more complex.

Here are five key facts that explain how AI chatbots like ChatGPT operate, what makes them useful, and why they can sometimes mislead.

1. It’s Not Just AI—Humans Are Behind the Scenes

Although chatbots like ChatGPT are powered by artificial intelligence, human trainers play a critical role in shaping their responses.

Initially, the model is trained on massive amounts of text to learn how to predict the next word in a sentence. But that alone isn’t enough.

That’s where human feedback comes in: reviewers correct and guide the model’s responses, especially on sensitive or ethical topics, to make them safer and more reliable.

For example, human reviewers shape how the model answers questions about dangerous or discriminatory topics, so that its responses reflect ethical values and responsible behavior.
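To make the idea of "predicting the next word" more concrete, here is a deliberately tiny sketch. It is not how ChatGPT is implemented: it only counts which word follows which in a made-up sentence collection, and the names `train_bigram`, `predict_next`, and `corpus` are invented for this illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers

def predict_next(followers, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# A made-up training text, for illustration only.
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))    # cat
print(predict_next(model, "zebra"))  # None
```

A real language model learns far richer patterns from far more text, but the basic task is the same: given what came before, guess what comes next.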

2. It Doesn’t Read Words Like Humans—It Analyzes “Tokens”

Unlike us, who read full words, ChatGPT breaks text down into smaller units called tokens, each of which can be a whole word or part of one.

For example, the sentence “ChatGPT is marvellous” might be split internally into pieces such as “Chat”, “G”, “PT”, “is”, “mar”, “vellous”; the exact split depends on the tokenizer.

This token-based process sometimes leads to misinterpretations, especially with complex languages or uncommon words.
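The splitting described above can be sketched with a toy tokenizer. This is a simplified illustration, not ChatGPT's actual tokenizer: the `tokenize` function and the small `vocab` set are assumptions made up for this example, and the sketch simply picks the longest known piece at each position, falling back to single characters.

```python
def tokenize(text, vocab):
    """Split each word into the longest pieces found in `vocab`.

    Unknown stretches fall back to single characters, so the loop always
    makes progress.
    """
    tokens = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest candidate first, shrinking until one matches.
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in vocab or end == start + 1:
                    tokens.append(piece)
                    start = end
                    break
    return tokens

# A hypothetical vocabulary of known pieces, for illustration only.
vocab = {"Chat", "G", "PT", "is", "mar", "vellous"}

print(tokenize("ChatGPT is marvellous", vocab))
# ['Chat', 'G', 'PT', 'is', 'mar', 'vellous']
```

With a different vocabulary the same sentence would be cut differently, which is one reason rare words can end up as many small, less meaningful pieces.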

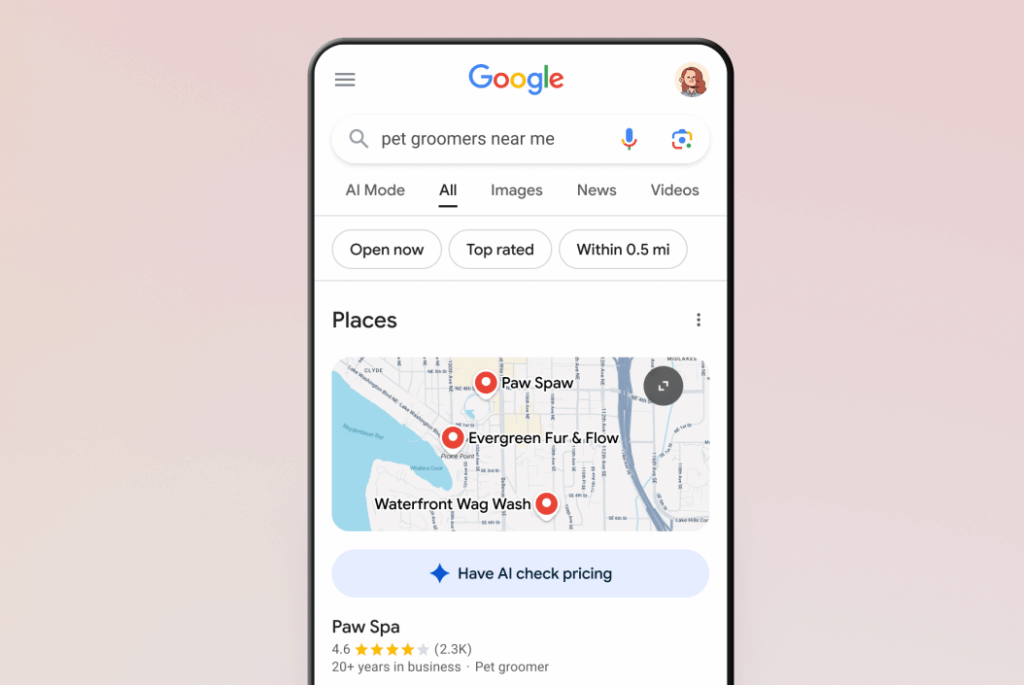

3. It Doesn’t Know What’s Happening Right Now

Despite its intelligence, ChatGPT doesn’t have real-time awareness.

It can’t know what happened in the world after its training cut-off date.

For instance, if you ask, “Who won the 2025 elections?”, it won’t know—unless it’s connected to the internet or a live search tool like Bing.

Even then, it relies on search results that have been filtered for reliability, and the process remains limited and computationally expensive.
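As a rough illustration of why a cut-off date matters, the sketch below flags questions that mention a year later than an assumed cut-off. Real chatbots decide when to search in more sophisticated ways; the `needs_live_search` function and the `TRAINING_CUTOFF_YEAR` value are hypothetical and chosen only for this example.

```python
import re

# Assumed cut-off year, for illustration only; not ChatGPT's real cut-off.
TRAINING_CUTOFF_YEAR = 2023

def needs_live_search(question, cutoff_year=TRAINING_CUTOFF_YEAR):
    """Return True if the question mentions a year after the cut-off."""
    years = [int(y) for y in re.findall(r"\b(?:19|20)\d{2}\b", question)]
    return any(year > cutoff_year for year in years)

print(needs_live_search("Who won the 2025 elections?"))  # True
print(needs_live_search("Who won the 2010 World Cup?"))  # False
```

A question flagged this way would have to be answered from a live source, because nothing in the training text could contain the answer.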

4. It Can “Hallucinate”—Confidently!

One of ChatGPT’s known issues is something called “hallucination”—when it provides wrong information with full confidence.

It might invent sources, cite fake studies, or misattribute quotes to non-existent books.

Despite advances in fact-checking, hallucinations remain a challenge, so it’s wise to verify any critical or sensitive information it provides.

5. It Doesn’t Do Math Like You Think—It Uses a Built-In Calculator

ChatGPT doesn’t “calculate” the way humans do.

When faced with a complex math problem, it analyzes the problem and sends the numbers to an internal calculator tool instead of working them out itself.

Combined with “step-by-step reasoning”, in which the model works through a problem one stage at a time, this allows it to solve problems logically, especially when the right tools are used.
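The calculator hand-off described above can be sketched as follows. This is an assumption-laden illustration rather than ChatGPT's real tool: the `calculate` function is a hypothetical stand-in that uses Python's standard `ast` module to evaluate plain arithmetic and reject anything else.

```python
import ast
import operator

# Arithmetic operators the toy calculator accepts.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}

def calculate(expression):
    """Evaluate a plain arithmetic expression; raise ValueError otherwise."""
    def evaluate(node):
        # Plain numbers (but not True/False, which Python treats as ints).
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](evaluate(node.left),
                                              evaluate(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -evaluate(node.operand)
        raise ValueError("unsupported expression")

    return evaluate(ast.parse(expression, mode="eval").body)

# The model would write out the expression; the tool does the arithmetic.
print(calculate("1234 * 5678"))  # 7006652
```

The division of labor is the point: the language model decides what needs to be computed, and a deterministic tool produces the exact number.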

Chatbots like ChatGPT operate in a highly intelligent way, but they are still models trained by people and driven by patterns, not understanding.

Knowing how ChatGPT operates helps you use it responsibly and effectively, whether for learning, content creation, or everyday tasks.