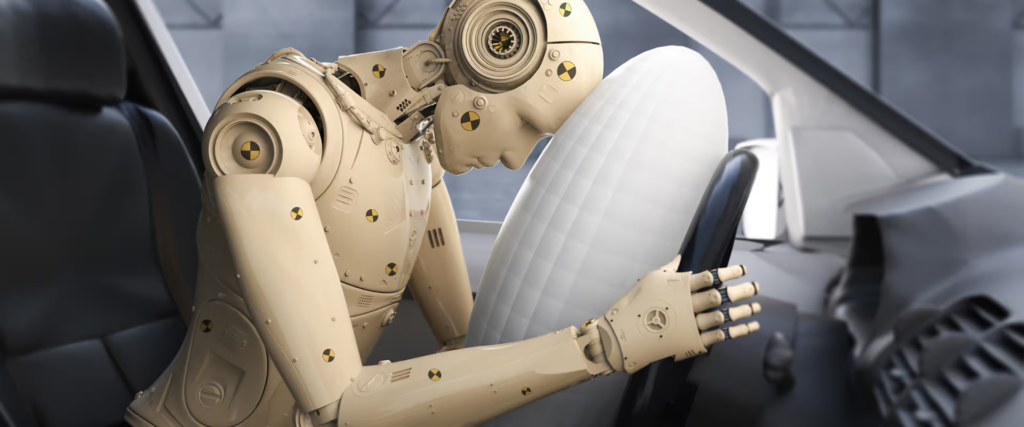

Physical AI safety standards are becoming an important topic because people want machines that move in the real world to behave as safely as cars on the road. Many engineers use the term to describe how rules from automotive safety can guide robots and other machines that work near humans. The reasoning is that if cars can follow strict systems to protect drivers, then machines that walk, lift, and carry things should follow something similar. That is why the term appears more and more often in new research: clear safety standards help build trust.

Physical AI Safety Standards in Real Machines

Real machines are where AI safety systems prove their value. Many teams look to automotive rules because cars have a long history of testing and inspection. They borrow the idea of layered defense, in which one part of the system checks another. In a car, that can mean the vehicle is not allowed to drive fast when a sensor fails. In the same way, an AI safety system can stop a robot when its camera or motor does not behave correctly. Some companies have already started applying these ideas in warehouses so that workers feel safe. Engineers say such systems keep things simple because the rules are clear and easy to follow.
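The "one part checks another" idea can be pictured as a small supervisory function that sits between a robot's motion planner and its motors. The sketch below is only an illustration under stated assumptions: the health flags, the speed limits, and the names `HealthReport` and `allowed_speed` are hypothetical and do not come from any particular standard or product.

```python
from dataclasses import dataclass

# Hypothetical speed limits in metres per second. Real values would come
# from a risk assessment; these numbers are for illustration only.
FULL_SPEED = 1.5
REDUCED_SPEED = 0.3
STOPPED = 0.0


@dataclass
class HealthReport:
    """Hypothetical health flags reported by lower-level components."""
    camera_ok: bool
    motor_ok: bool
    human_nearby: bool


def allowed_speed(report: HealthReport, requested: float) -> float:
    """Supervisory layer that clamps the planner's requested speed.

    These checks run separately from whatever produced `requested`,
    which mirrors the layered idea of one part checking another.
    """
    if not report.motor_ok:
        # A misbehaving motor: stop the robot.
        return STOPPED
    if not report.camera_ok:
        # Without vision the robot cannot see people nearby: stop.
        return STOPPED
    # Healthy hardware: allow full speed unless a person is close by.
    limit = REDUCED_SPEED if report.human_nearby else FULL_SPEED
    # Never exceed the limit and never command a negative speed.
    return max(0.0, min(requested, limit))


if __name__ == "__main__":
    print(allowed_speed(HealthReport(True, True, False), 2.0))   # 1.5
    print(allowed_speed(HealthReport(True, True, True), 2.0))    # 0.3
    print(allowed_speed(HealthReport(False, True, False), 2.0))  # 0.0
```

Keeping the supervisor separate from the planner means a planning bug cannot by itself push the robot past the limit, which is the point of layering the checks.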

How Old Car Safety Rules Inspire New AI Work

Established automotive methods can also help build physical AI protection in a new way. Cars rely on many small check systems, and that style suits robots too. Factory workers who see a robot want to know it will not turn suddenly or fall over. Physical AI protection therefore borrows automotive ideas such as slow stops, backup sensors, and safe modes. Developers say it can also speed up robot testing because the testing steps are already well understood from automotive work. That saves time and money and makes the machines readier for public spaces.
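Backup sensors and slow stops can work together, as in the sketch below. Two range sensors are cross-checked, and if they disagree the robot enters a safe mode that ramps its speed down gradually instead of halting abruptly. The tolerance, the deceleration limit, and the function names are hypothetical values chosen for illustration, not figures from any automotive or robotics standard.

```python
# Hypothetical tolerance: how far (in metres) the two range readings may
# disagree before the robot stops trusting them.
MAX_DISAGREEMENT_M = 0.2
# Hypothetical deceleration limit for a controlled ("slow") stop, in m/s^2.
MAX_DECEL = 0.5


def sensors_agree(primary_m: float, backup_m: float) -> bool:
    """Cross-check a primary range sensor against a backup sensor."""
    return abs(primary_m - backup_m) <= MAX_DISAGREEMENT_M


def stop_profile(speed: float, dt: float) -> list[float]:
    """Ramp speed down to zero, losing at most MAX_DECEL * dt per tick.

    Returns the commanded speed for each control tick of length `dt` seconds.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    profile = []
    while speed > 0.0:
        speed = max(0.0, speed - MAX_DECEL * dt)
        profile.append(round(speed, 3))
    return profile


def next_commands(primary_m: float, backup_m: float,
                  speed: float, dt: float = 0.5) -> list[float]:
    """Keep the current speed if sensors agree, else enter a slow stop."""
    if sensors_agree(primary_m, backup_m):
        return [speed]  # normal operation
    return stop_profile(speed, dt)  # safe mode: controlled stop


if __name__ == "__main__":
    print(next_commands(2.0, 2.1, 1.0))  # [1.0]
    print(next_commands(2.0, 3.0, 1.0))  # [0.75, 0.5, 0.25, 0.0]
```

A gradual ramp is used instead of an instant halt because a sudden stop can itself be hazardous, for example by tipping a heavy load.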

Why Strong Safety Matters When AI Moves in the Real World

Many people trust phones and screens, but when a machine moves through physical space they worry more, which is why physical AI protection must be clear. If a robot helps in a hospital or carries heavy boxes, any mistake can cause harm. People want to know the robot can slow down or pause when needed, and physical AI protection shows that someone has checked the system thoroughly. Researchers expect robots to enter more homes in the coming years, and physical AI protection will shape how much people trust them. Without these rules the risk grows, which is why leaders are pushing for strong standards.

The Future of Building Safer Moving Machines

Looking ahead, many experts say physical AI protection will not stay the same. More groups will join in and add steps drawn from aviation and smart factories. Some want every robot to come with a clear report so people can see how safe the machine is. Others want physical AI protection to include training for the people who work near these robots. The idea is simple: if cars became safe over many years, robots can follow the same path. Physical AI protection will keep growing as robots spread into streets, markets, and airports, and the more common these machines become, the more the safety rules will matter.